Running keyword spotting on STM32 (Part 4): Running and testing on the edge device

A model serves no real purpose until it is deployed and used on an edge device.

How to run a neural network and perform inference on an STM32

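STM32 firmware is most commonly written in C. A few people use MicroPython or Lua, but these are far from mainstream, and running a framework such as PyTorch on an STM32 is unrealistic. We therefore need a neural-network inference framework that works in C. Searching GitHub, I found several inference engines written in C, such as sipeed/TinyMaix and xboot/libonnx. Both are fairly easy to use, but they share an obvious drawback: they cannot take advantage of the hardware acceleration (such as the FPU) on Arm chips. For this, Arm provides CMSIS-NN, an inference library for Arm Cortex-M cores that uses the core's hardware features to run inference faster. CMSIS-NN is still somewhat inconvenient to use, however. It is simpler to use cube.ai (X-CUBE-AI), the tool ST provides for STM32CubeMX, which gets a model deployed much faster. In short, the process is:

1. Install the X-CUBE-AI extension.
2. Select the application mode.
3. Import and validate the model.
4. Generate the project files.
5. Write the inference code.

Each step is described in detail below.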
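Whether any of these engines can use the FPU depends first on how the firmware is compiled. A minimal sketch (my own illustration, not part of the original project): the ACLE predefined macro `__ARM_FP` is defined by GCC and Clang when hardware floating point is enabled (for example `-mfpu=fpv4-sp-d16 -mfloat-abi=hard` on a Cortex-M4F) and is left undefined for `-mfloat-abi=soft`, so a one-line check confirms whether the build really targets the FPU. The function name `kws_build_uses_hw_fpu` is hypothetical.

```c
/* Hypothetical helper: reports whether this translation unit was compiled
 * with hardware floating point enabled.
 * __ARM_FP is an ACLE predefined macro: defined when the compiler may emit
 * FPU instructions, undefined with -mfloat-abi=soft (and on non-Arm hosts). */
int kws_build_uses_hw_fpu(void)
{
#if defined(__ARM_FP)
    return 1;   /* float math compiles to FPU instructions */
#else
    return 0;   /* float math goes through software emulation routines */
#endif
}
```

On a Cortex-M4F or M7 the FPU must also be switched on at start-up; the CMSIS `SystemInit()` in STM32 projects does this when `__FPU_PRESENT` and `__FPU_USED` are both set.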

Install the AI extension for CubeMX

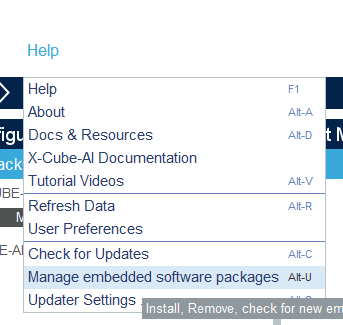

In the Help menu, choose Manage embedded software packages.

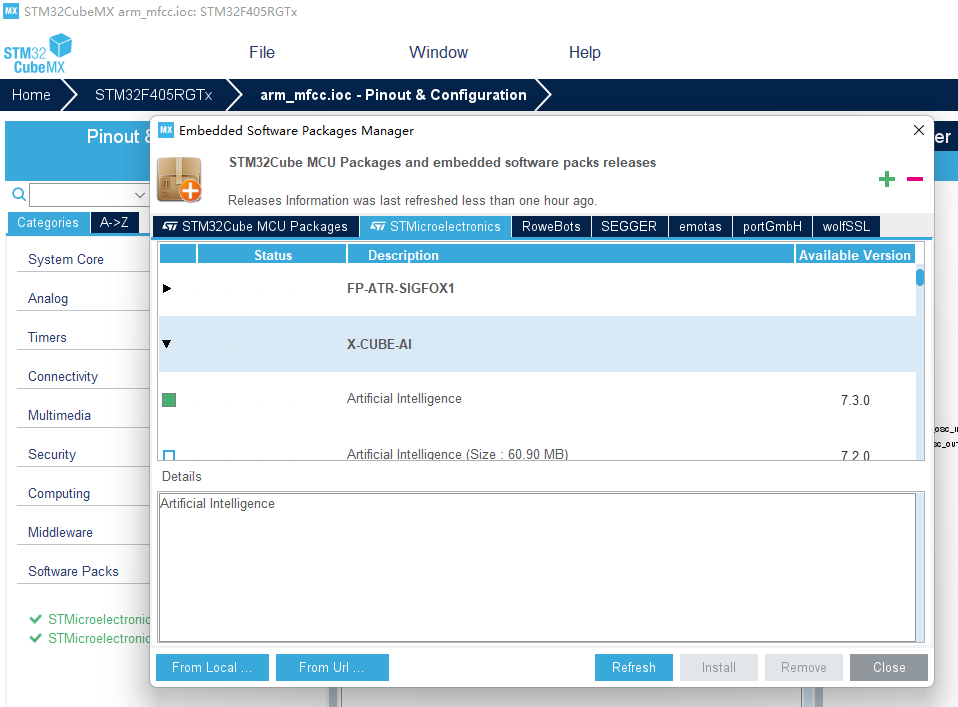

Find X-CUBE-AI under the corresponding tab and install the latest version; this may take a while.

Create the project

Create a standard project for your chip and enable the peripherals you need, such as the UART; I won't go into that here.

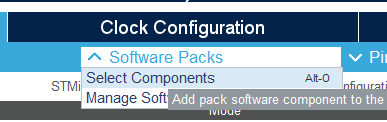

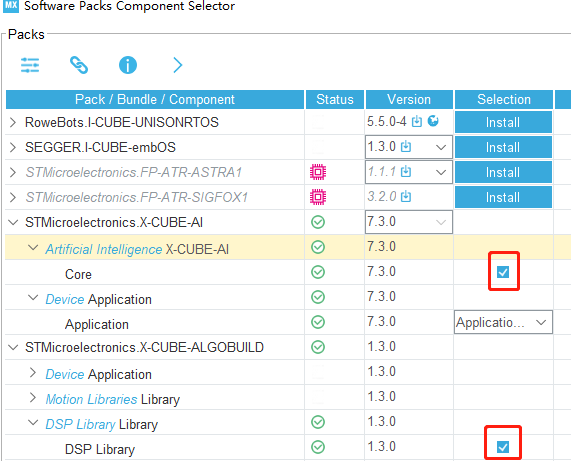

Enable the neural-network software pack, and also add the DSP pack, which the MFCC algorithm will need later.

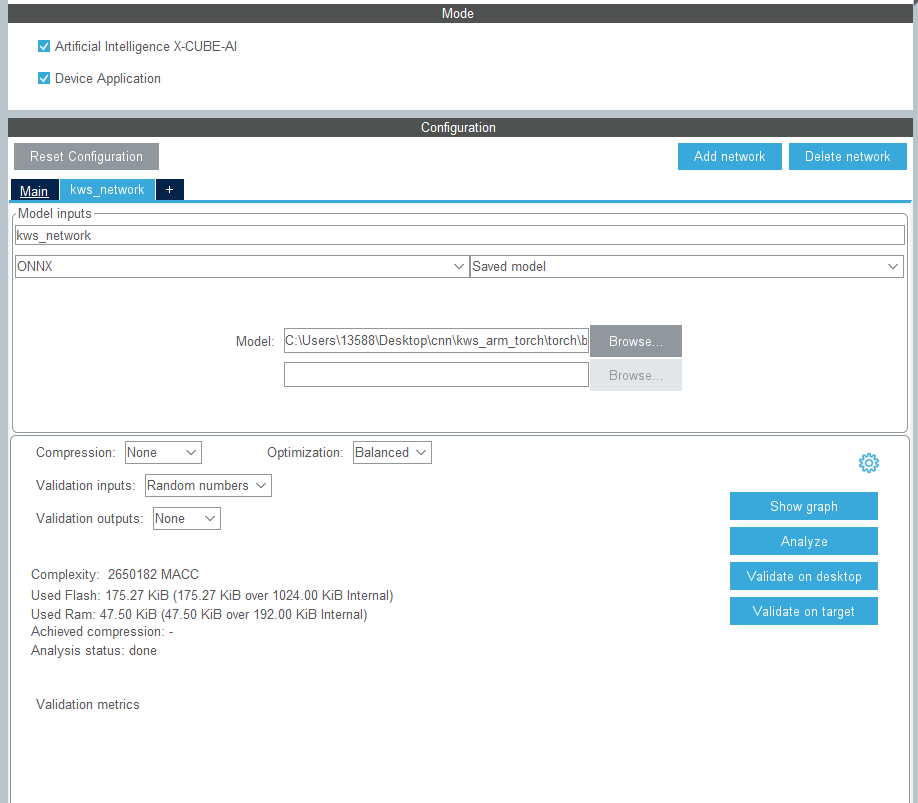

Create the network model

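Add a new network model here. I named it kws_network, chose ONNX as the format, and selected the ONNX file generated at the end of the previous article. Options such as model compression can reduce RAM and ROM usage; I did not use compression here.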
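For an uncompressed float32 model the memory footprint is easy to estimate by hand, which helps when reading the analyze report further down. A minimal sketch, assuming `sizeof(float) == 4` (true on Cortex-M): the constants are copied from that report, and the helper names are hypothetical.

```c
#include <stddef.h>

/* Constants copied from the X-CUBE-AI analyze report of this model */
#define KWS_PARAMS       44870u            /* "params #" */
#define KWS_IN_FRAMES    49u               /* input shape (1,49,10,1) */
#define KWS_IN_COEFFS    10u
#define KWS_NUM_CLASSES  6u                /* output shape (1,1,1,6) */
#define KWS_FLATTEN      (12u * 5u * 64u)  /* MaxPool output h:12, w:5, c:64 */

/* Uncompressed float32: every parameter occupies 4 bytes of flash */
static size_t weights_bytes(size_t params)
{
    return params * sizeof(float);
}

/* The input tensor is one 49x10 MFCC matrix of float32 values */
static size_t input_bytes(void)
{
    return (size_t)KWS_IN_FRAMES * KWS_IN_COEFFS * sizeof(float);
}

/* A fully connected (Gemm) layer stores in*out weights plus out biases */
static size_t dense_params(size_t in, size_t out)
{
    return in * out + out;
}
```

These give 179,480 B of weights, a 1,960 B input and 23,046 parameters in the final fully connected layer, all matching the report. That last figure is about 51% of all 44,870 parameters, which is the 51.4% ROM share the report attributes to fc1_weight, so that layer is where compression would pay off first.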

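Click the Analyze button. If the model is valid, the tool prints complete information about it, including its memory usage at runtime, for example: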

```text
Analyzing model
C:/Users/13588/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/7.3.0/Utilities/windows/stm32ai analyze --name kws_network -m C:/Users/13588/Desktop/cnn/kws_arm_torch/torch/best_model.onnx --type onnx --compression none --verbosity 1 --workspace C:\Users\13588\AppData\Local\Temp\mxAI_workspace7289868438037005923542168573192280 --output C:\Users\13588\.stm32cubemx\network_output --allocate-inputs --allocate-outputs
Neural Network Tools for STM32AI v1.6.0 (STM.ai v7.3.0-RC5)

Exec/report summary (analyze)
----------------------------------------------------------------------------------------------------------
model file         : C:\Users\13588\Desktop\cnn\kws_arm_torch\torch\best_model.onnx
type               : onnx
c_name             : kws_network
compression        : none
options            : allocate-inputs, allocate-outputs
optimization       : balanced
target/series      : generic
workspace dir      : C:\Users\13588\AppData\Local\Temp\mxAI_workspace7289868438037005923542168573192280
output dir         : C:\Users\13588\.stm32cubemx\network_output
model_fmt          : float
model_name         : best_model
model_hash         : 48c47d921648a380f1253c708d25834e
params #           : 44,870 items (175.27 KiB)
----------------------------------------------------------------------------------------------------------
input 1/1          : 'input' (domain:activations/**default**)
                   : 490 items, 1.91 KiB, ai_float, float, (1,49,10,1)
output 1/1         : 'output' (domain:activations/**default**)
                   : 6 items, 24 B, ai_float, float, (1,1,1,6)
macc               : 2,650,182
weights (ro)       : 179,480 B (175.27 KiB) (1 segment)
activations (rw)   : 48,640 B (47.50 KiB) (1 segment) *
ram (total)        : 48,640 B (47.50 KiB) = 48,640 + 0 + 0
----------------------------------------------------------------------------------------------------------
(*) 'input'/'output' buffers can be used from the activations buffer

Model name - best_model ['input'] ['output']
-----------------------------------------------------------------------------------------------
id layer (original) oshape param/size macc connected to
-----------------------------------------------------------------------------------------------
21 fc1_weight (Gemm) [h:3840,c:6] 23,040/92,160
fc1_bias (Gemm) [c:6] 6/24
-----------------------------------------------------------------------------------------------
0 input () [b:1,h:49,w:10,c:1]
-----------------------------------------------------------------------------------------------
1 x (Conv) [b:1,h:24,w:5,c:64] 2,624/10,496 307,264 input
-----------------------------------------------------------------------------------------------
2 input_1 (Relu) [b:1,h:24,w:5,c:64] 7,680 x
-----------------------------------------------------------------------------------------------
3 x_3 (Conv) [b:1,h:24,w:5,c:64] 640/2,560 69,184 input_1
-----------------------------------------------------------------------------------------------
4 input_4 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_3
-----------------------------------------------------------------------------------------------
5 x_7 (Conv) [b:1,h:24,w:5,c:64] 4,160/16,640 491,584 input_4
-----------------------------------------------------------------------------------------------
6 input_8 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_7
-----------------------------------------------------------------------------------------------
7 x_11 (Conv) [b:1,h:24,w:5,c:64] 640/2,560 69,184 input_8
-----------------------------------------------------------------------------------------------
8 input_12 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_11
-----------------------------------------------------------------------------------------------
9 x_15 (Conv) [b:1,h:24,w:5,c:64] 4,160/16,640 491,584 input_12
-----------------------------------------------------------------------------------------------
10 input_16 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_15
-----------------------------------------------------------------------------------------------
11 x_19 (Conv) [b:1,h:24,w:5,c:64] 640/2,560 69,184 input_16
-----------------------------------------------------------------------------------------------
12 input_20 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_19
-----------------------------------------------------------------------------------------------
13 x_23 (Conv) [b:1,h:24,w:5,c:64] 4,160/16,640 491,584 input_20
-----------------------------------------------------------------------------------------------
14 input_24 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_23
-----------------------------------------------------------------------------------------------
15 x_27 (Conv) [b:1,h:24,w:5,c:64] 640/2,560 69,184 input_24
-----------------------------------------------------------------------------------------------
16 input_28 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_27
-----------------------------------------------------------------------------------------------
17 x_31 (Conv) [b:1,h:24,w:5,c:64] 4,160/16,640 491,584 input_28
-----------------------------------------------------------------------------------------------
18 onnxMaxPool_26 (Relu) [b:1,h:24,w:5,c:64] 7,680 x_31
-----------------------------------------------------------------------------------------------
19 onnxReshape_27 (MaxPool) [b:1,h:12,w:5,c:64] 7,680 onnxMaxPool_26
-----------------------------------------------------------------------------------------------
20 onnxGemm_29 (Reshape) [b:1,c:3840] onnxReshape_27
-----------------------------------------------------------------------------------------------
21 output (Gemm) [b:1,c:6] 23,046 onnxGemm_29
-----------------------------------------------------------------------------------------------
model/c-model: macc=2,650,182/2,650,182 weights=179,480/179,480 activations=--/48,640 io=--/0

Number of operations per c-layer
----------------------------------------------------------------------------------
c_id m_id name (type) #op (type)
----------------------------------------------------------------------------------
0 2 x (conv2d) 314,944 (smul_f32_f32)
1 4 x_3 (conv2d) 76,864 (smul_f32_f32)
2 6 x_7 (conv2d) 499,264 (smul_f32_f32)
3 8 x_11 (conv2d) 76,864 (smul_f32_f32)
4 10 x_15 (conv2d) 499,264 (smul_f32_f32)
5 12 x_19 (conv2d) 76,864 (smul_f32_f32)
6 14 x_23 (conv2d) 499,264 (smul_f32_f32)
7 16 x_27 (conv2d) 76,864 (smul_f32_f32)
8 19 x_31 (optimized_conv2d) 506,944 (smul_f32_f32)
9 20 onnxGemm_29_to_chlast (transpose) 0 (smul_f32_f32)
10 21 output (dense) 23,046 (smul_f32_f32)
----------------------------------------------------------------------------------
total 2,650,182

Number of operation types
---------------------------------------------
smul_f32_f32 2,650,182 100.0%

Complexity report (model)
---------------------------------------------------------------------------------
m_id name c_macc c_rom c_id
---------------------------------------------------------------------------------
21 fc1_weight | 0.9% |||||||||||||||| 51.4% [10]
2 input_1 |||||||||| 11.9% || 5.8% [0]
4 input_4 ||| 2.9% | 1.4% [1]
6 input_8 ||||||||||||||| 18.8% ||| 9.3% [2]
8 input_12 ||| 2.9% | 1.4% [3]
10 input_16 ||||||||||||||| 18.8% ||| 9.3% [4]
12 input_20 ||| 2.9% | 1.4% [5]
14 input_24 ||||||||||||||| 18.8% ||| 9.3% [6]
16 input_28 ||| 2.9% | 1.4% [7]
19 onnxReshape_27 |||||||||||||||| 19.1% ||| 9.3% [8]
20 onnxGemm_29 | 0.0% | 0.0% [9]
---------------------------------------------------------------------------------
macc=2,650,182 weights=179,480 act=48,640 ram_io=0

Creating txt report file C:\Users\13588\.stm32cubemx\network_output\kws_network_analyze_report.txt
elapsed time (analyze): 3.650s

Getting Flash and Ram size used by the library
Model file: best_model.onnx
Total Flash: 201396 B (196.68 KiB)
    Weights: 179480 B (175.27 KiB)
    Library: 21916 B (21.40 KiB)
Total Ram: 53424 B (52.17 KiB)
    Activations: 48640 B (47.50 KiB)
    Library: 4784 B (4.67 KiB)
    Input: 1960 B (1.91 KiB included in Activations)
    Output: 24 B (included in Activations)
Done
Analyze complete on AI model
```

Generate the project, port the MFCC algorithm, enable DSP support, and rewrite the inference functions

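I won't describe porting MFCC and enabling DSP support in detail. Porting MFCC is just a matter of importing a few source files into the project and adding their source files and header include paths. Detailed instructions for enabling the DSP library are easy to find online (for example via Baidu). When compiling you will run into a macro-definition error; simply comment out the offending definition by hand. One more thing to note: the usual printf retargeting method did not seem to work here, so I wrote a replacement as follows: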

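```c
#include <stdarg.h>
#include <stdio.h>
#include "main.h"                         /* pulls in the STM32 HAL */

extern UART_HandleTypeDef huart1;         /* created by CubeMX */

static uint8_t UartTxBuf[200];

/* printf-style debug output over USART1 (blocking) */
void Debug(const char *format, ...)
{
    va_list args;
    int len;

    va_start(args, format);
    /* Pass the real buffer size: vsnprintf reserves room for the '\0' itself */
    len = vsnprintf((char *)UartTxBuf, sizeof(UartTxBuf), format, args);
    va_end(args);

    if (len < 0) {
        return;                               /* formatting error */
    }
    if (len >= (int)sizeof(UartTxBuf)) {
        len = (int)sizeof(UartTxBuf) - 1;     /* message was truncated */
    }
    HAL_UART_Transmit(&huart1, UartTxBuf, (uint16_t)len, 0xFF);
}
```

Reimplement the inference interface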

Add the following functions to app_x-cube-ai.c and declare them in its header so they can be called from other files:

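```c
/* kws_network, data_activations0, ai_input, ai_output and ai_log_err()
 * are already defined in the generated app_x-cube-ai.c.
 * Declarations for app_x-cube-ai.h:
 *   int kws_init(void);
 *   int kws_run(const void *input, void *output);
 */

/* Create the network instance; returns 0 on success, -1 on error */
int kws_init(void)
{
    ai_error err;

    /* Create and initialize an instance of the model */
    err = ai_kws_network_create_and_init(&kws_network, data_activations0, NULL);
    if (err.type != AI_ERROR_NONE) {
        ai_log_err(err, "ai_kws_network_create_and_init");
        return -1;
    }

    /* Fetch the descriptors of the model's input and output buffers */
    ai_input = ai_kws_network_inputs_get(kws_network, NULL);
    ai_output = ai_kws_network_outputs_get(kws_network, NULL);
    return 0;
}

/* Run one inference; returns the number of batches processed (1 on success) */
int kws_run(const void *input, void *output)
{
    ai_input->data = AI_HANDLE_PTR(input);
    ai_output->data = AI_HANDLE_PTR(output);
    return ai_kws_network_run(kws_network, ai_input, ai_output);
}
```

In main(), call kws_init() to initialize the network, then write the following wrapper function: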

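```c
float out_data[6];   /* one score per class */

void kws_audio_process(const int16_t *audio_data)
{
    float *ret_data;
    uint8_t max_index = 0;

    uint32_t now = HAL_GetTick();
    ret_data = KWS_MFCC_compte(audio_data);   /* 49 x 10 MFCC features */
    if (kws_run(ret_data, out_data) != 1) {
        Debug("inference failed\r\n");
        return;
    }
    uint32_t end = HAL_GetTick();
    /* elapsed ms, covering MFCC extraction plus inference */
    Debug("speed time:%lu ", (unsigned long)(end - now));

    /* argmax: index of the highest class score */
    for (int i = 0; i < 6; i++) {
        if (out_data[i] > out_data[max_index]) {
            max_index = i;
        }
    }
    Debug("result is %d\r\n", max_index);
}
```

Call it like this: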

```c
Debug("test 1->");
kws_audio_process(audio_one);
Debug("test 2->");
kws_audio_process(audio_two);
Debug("test 3->");
kws_audio_process(audio_three);
Debug("test 4->");
kws_audio_process(audio_four);
Debug("test 5->");
kws_audio_process(audio_five);
```

Where do audio_one to audio_five come from? The wav2pcm.py script under kws_arm_torch\tools\wav2pcm converts local WAV files into .h files that the C code can use directly.
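The arrays only need to match what the MFCC front end expects. As a rough consistency check, assuming (this post does not state it) that the clips are 1 s of 16-bit PCM at 16 kHz and that the MFCC code uses 40 ms frames with a 20 ms hop, as in Arm's ML-KWS-for-MCU example, each clip yields exactly the 49 frames of the model's (1,49,10,1) input. All constants and helper names below are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* ASSUMED front-end settings, not taken from this project */
#define SAMPLE_RATE_HZ  16000u
#define CLIP_MS         1000u
#define FRAME_MS        40u
#define HOP_MS          20u

static size_t ms_to_samples(size_t ms)
{
    return (size_t)SAMPLE_RATE_HZ * ms / 1000u;
}

/* Number of whole analysis frames that fit in n_samples */
static size_t mfcc_num_frames(size_t n_samples, size_t frame_len, size_t hop)
{
    if (n_samples < frame_len) {
        return 0;
    }
    return (n_samples - frame_len) / hop + 1;
}

/* Flash taken by one clip stored as 16-bit PCM */
static size_t pcm_bytes(size_t n_samples)
{
    return n_samples * sizeof(int16_t);
}
```

Here (16000 - 640) / 320 + 1 = 49 frames. Under the same assumption each clip costs 32,000 bytes of flash, so the five test clips take 160,000 bytes, close to the 179,480 bytes of model weights; worth keeping in mind on parts with little flash.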

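After compiling and flashing, we get the results below. The network's final output is simply the output of the fully connected layer (one score per class), so we only need to pick the index of the largest value: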

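```text
test 1->speed time:220 result is 1
test 2->speed time:221 result is 2
test 3->speed time:221 result is 3
test 4->speed time:221 result is 4
test 5->speed time:221 result is 5
```

Each test takes about 220 ms as measured with HAL_GetTick(), and this figure covers both MFCC extraction and network inference.

Source code: kws_arm_torch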

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Tian Shuaikang's study notes (田帅康学习笔记)
